Despite having been with us for a few years, smartwatches have not enjoyed the overwhelming popularity that many expected. This is mostly due to the limitations they still have: a smartwatch depends on our smartphone and works as an additional screen that saves us from pulling out the phone with each notification, although there have been some attempts to make it more independent.

Another factor that has slowed adoption is the screen itself, and how limiting it is to interact with applications in such a small space, a problem that developers have run into and few have been able to solve. Now we have an interesting project that seeks to solve this by making our skin serve as a touchpad that extends the capabilities of our smartwatch.

SkinTrack, a solution to small screens

A team from the Future Interfaces Group at Carnegie Mellon University has developed a technology that turns our skin into a controller, allowing us to move through the options and menus on the screen of a smartwatch without touching it: the watch recognizes the touches and movements we make on our skin.

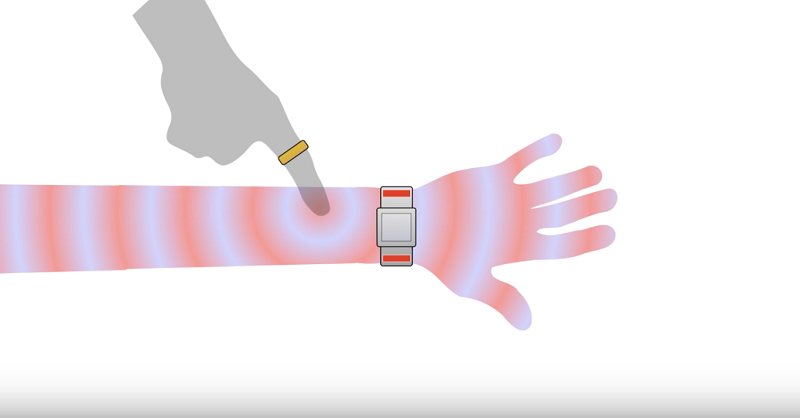

The objective of this project is to create new sensing and interface technologies that make interaction between humans and computers more fluid, intuitive and powerful. The system is based on a ring that emits a signal and a sensing band attached to the watch. When the finger wearing the ring touches the skin of the arm wearing the band, a high-frequency signal travels through the arm, and the band uses its electrodes to determine the position of the finger and the action being performed.

The system can detect actions such as dragging, short or long touches, and swipes up, down or sideways. There is even the option of using parts of the skin as shortcuts: for example, touching the elbow can be programmed to open certain applications or run a particular action. It can also recognize gestures that we “draw” on our skin to perform these actions.
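
To give a rough idea of how a watch app might tell these actions apart, here is a minimal Python sketch. It is not SkinTrack's actual code: the `Sample` type, the `classify` function and both thresholds are hypothetical, and it assumes the band already reports finger positions in millimeters, with y growing downward as on a screen.

```python
from dataclasses import dataclass
from math import hypot


@dataclass
class Sample:
    """One finger position reported by the band (hypothetical format)."""
    t: float  # seconds since the finger touched the skin
    x: float  # position in millimeters
    y: float  # position in millimeters, assumed to grow downward


# Illustrative thresholds, not values from the project.
LONG_PRESS_S = 0.5  # touches held at least this long count as long presses
MOVE_MM = 15.0      # about twice the 7.6 mm average error the article reports


def classify(trace):
    """Label a touch trace as a tap, a long press or a swipe direction."""
    if not trace:
        raise ValueError("empty trace")
    first, last = trace[0], trace[-1]
    dx, dy = last.x - first.x, last.y - first.y
    # Small displacement: the finger stayed put, so only duration matters.
    if hypot(dx, dy) < MOVE_MM:
        return "long_press" if last.t - first.t >= LONG_PRESS_S else "tap"
    # Otherwise the dominant axis decides the swipe direction.
    if abs(dx) >= abs(dy):
        return "swipe_right" if dx > 0 else "swipe_left"
    return "swipe_down" if dy > 0 else "swipe_up"
```

Keeping the movement threshold well above the tracking error is the point of the sketch: a jittery but stationary finger should still count as a tap and not as a swipe.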

For now this is a project with no plans for commercialization in the short or medium term, and it still has some flaws, such as an average error of 7.6 millimeters, although it detects touches with 99% accuracy. Note that organizations such as Microsoft, NSF, Disney, Google and Qualcomm are sponsoring this project, so we will probably see the first commercial applications of this technology in the not too distant future.